How to Train Your Stochastic Parrot: Large Language Models for Political Texts

Jake S. Truscott

Political Science Research and Methods (2025)

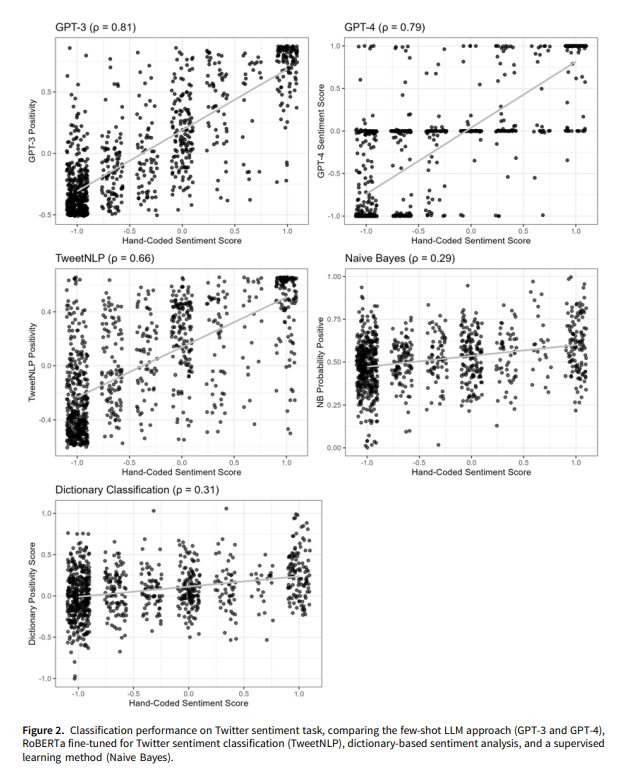

Abstract: We demonstrate how few-shot prompts to large language models (LLMs) can be effectively applied to a wide range of text-as-data tasks in political science, including sentiment analysis, document scaling, and topic modeling. In a series of pre-registered analyses, this approach outperforms conventional supervised learning methods without the need for extensive data pre-processing or large sets of labeled training data. Performance is comparable to expert and crowd-coding methods at a fraction of the cost. We propose a set of best practices for adapting these models to social science measurement tasks, and develop an open-source software package for researchers.
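To make "few-shot prompting" concrete, the sketch below shows one common way such a prompt can be assembled for tweet sentiment classification: an instruction, a handful of labeled examples, and the unlabeled target text ending in an open slot for the model to complete. This is a minimal illustration under stated assumptions, not the authors' software package. The prompt template, the three-way label set, the example tweets, and the helper names (`Example`, `build_few_shot_prompt`, `parse_label`) are all hypothetical, and the actual call to an LLM is deliberately omitted because it depends on whichever model and API a researcher uses.

```python
from dataclasses import dataclass

# Hypothetical label set; a real study would use its own coding scheme.
LABELS = ("Positive", "Negative", "Neutral")


@dataclass(frozen=True)
class Example:
    """A labeled example shown to the model inside the prompt (hypothetical)."""
    text: str
    label: str


def build_few_shot_prompt(examples, target_text,
                          instruction="Classify the sentiment of each tweet "
                                      "as Positive, Negative, or Neutral."):
    """Concatenate an instruction, labeled examples, and the unlabeled target."""
    blocks = [instruction, ""]
    for ex in examples:
        blocks.append(f"Tweet: {ex.text}\nSentiment: {ex.label}\n")
    # The target ends with an open "Sentiment:" slot for the model to complete.
    blocks.append(f"Tweet: {target_text}\nSentiment:")
    return "\n".join(blocks)


def parse_label(completion, labels=LABELS):
    """Map the first word of a raw model completion onto the label set.

    Returns None when the completion is empty or matches no known label,
    so unparseable outputs can be flagged rather than silently miscoded.
    """
    words = completion.strip().split()
    if not words:
        return None
    token = words[0].strip(".,:;!").capitalize()
    return token if token in labels else None


if __name__ == "__main__":
    demo = [
        Example("Great turnout at the rally today!", "Positive"),
        Example("This bill is a disaster.", "Negative"),
    ]
    print(build_few_shot_prompt(demo, "The hearing starts at noon."))
```

Keeping prompt construction and output parsing as separate, deterministic steps means the only stochastic component is the model call itself, which makes it easier to audit which prompts produced which codes.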

Citation: Ornstein, J. T., Blasingame, E. N., & Truscott, J. S. (2025). How to train your stochastic parrot: Large language models for political texts. Political Science Research and Methods, 13(2), 264-281.

Figure: Classification Performance on Twitter Sentiment Compared to Alternative Strategies
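Comparisons of classification performance across strategies typically score each strategy's predicted labels against a held-out set of human-coded labels. The sketch below computes two standard metrics for that purpose, accuracy and macro-averaged F1, in plain Python. It is illustrative only: the choice of these two metrics is an assumption and may differ from what the figure reports, the function names are hypothetical, and the toy labels in any usage are invented rather than drawn from the paper's data.

```python
def accuracy(gold, pred):
    """Share of items where the predicted label equals the human-coded label."""
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and the same length")
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-class F1, over the classes present in `gold`.

    A prediction of None (e.g. an unparseable model output) never matches
    any class, so it counts as a miss for the true class.
    """
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and the same length")
    scores = []
    for label in sorted(set(gold)):
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores.append(f1)
    return sum(scores) / len(scores)
```

Macro-averaging weights each sentiment class equally, which guards against a classifier looking strong simply by predicting the majority class; plain accuracy is reported alongside it because it is easier to interpret.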